机器学习:代码 - Machine Learning Code

1. 线性回归(Linear Regression)

1.1 Boston Housing的数据

1.1.1 导入库

1

2

3

4

5

6

7

8

# 导入 NumPy 库:一个用于数值计算的库,提供了大量的数学函数来操作数组。

import numpy as np

# 导入 Matplotlib 的 pyplot 模块:这是一个绘图库,用于创建静态、动态、交互式的可视化图形。

import matplotlib.pyplot as plt

# 从 scikit-learn 库中导入 preprocessing 模块:这个模块提供了几种常用的实用功能,如特征缩放、中心化、标准化和二值化等。

from sklearn import preprocessing

1.1.2 导入数据

1

2

3

4

5

6

7

8

9

# 导入 pandas 库: 用于处理csv文件

import pandas as pd

data_url = "https://lib.stat.cmu.edu/datasets/boston"

# sep="\s+"意味着数据列之间由一个或多个空格分隔。skiprows=22表示跳过前22行,header=None表示数据没有列标题。

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

# 第一部分是raw_df的偶数行(从0开始)的所有列,第二部分是raw_df的奇数行的前两列。并且是水平堆叠

boston_data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

# raw_df的奇数行的第三列作为目标变量

target = raw_df.values[1::2, 2]

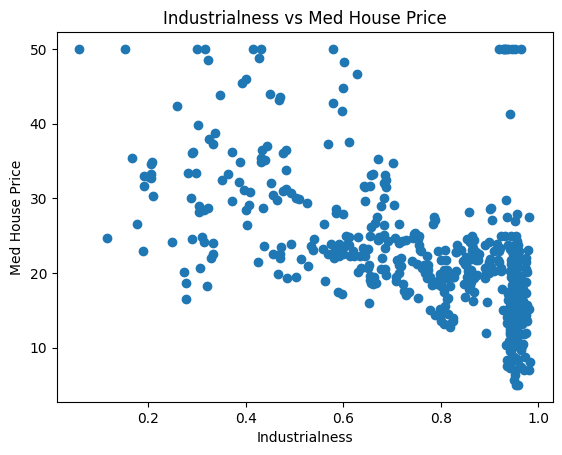

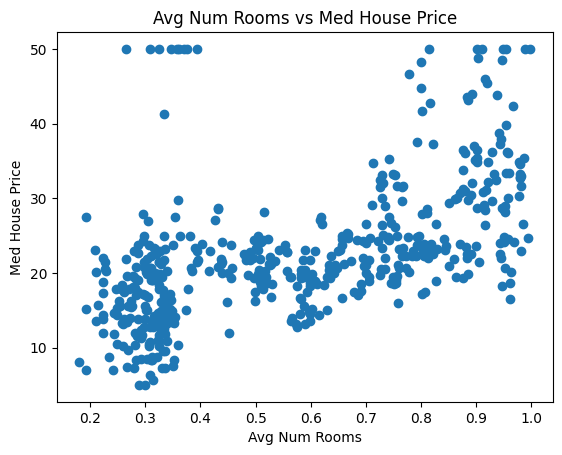

为了使示例简单,将仅使用两个功能:INDUS和RM。 这些和其他功能的解释可在数据页上找到。

1

2

3

4

5

6

7

# 获取bosten数据

data = boston_data;

# 仅仅处理INDUS和RM, 从data中选择所有行但只选择第3和第6列

x_input = data[:, [2,5]]

y_target = target;

# 标准化数据,使其拥有规则性

x_input = preprocessing.normalize(x_input)

1.1.3 可视化(Visualization)

1

2

3

4

5

6

7

8

9

10

11

12

# 对两个特征单独地画图

plt.title('Industrialness vs Med House Price')

plt.scatter(x_input[:, 0], y_target)

plt.xlabel('Industrialness')

plt.ylabel('Med House Price')

plt.show()

plt.title('Avg Num Rooms vs Med House Price')

plt.scatter(x_input[:, 1], y_target)

plt.xlabel('Avg Num Rooms')

plt.ylabel('Med House Price')

plt.show()

1.2 定义一个线性回归模型(Defining a Linear Regression Model)

线性回归模型为: \(f(x)=\mathbf{w}^\top \mathbf{x}+b=w_{1}x_{1}+w_{2}x_{2}+b,\)

np.dot(w, v) for vector dot product

np.dot(W, V) for matrix dot product

1

2

3

4

5

6

def linearmodel(w, b, x):

'''

Input: w 是权重, b 是截距, x 是d维的向量

Output: 预测的输出

'''

return np.dot(w, x) + b

1

2

3

4

5

6

7

8

9

10

11

def linearmat_1(w, b, X):

'''

Input: w 是权重, b 是截距, X 是数据矩阵 (n x d)

Output: 包含线性模型预测的向量

'''

# n 是训练例子的数量

n = X.shape[0]

t = np.zeros(n)

for i in range(n):

t[i] = linearmodel(w, b, X[i, :])

return t

1.2.1 向量化(Vectorization)

1

2

3

4

5

6

7

def linearmat_2(w, X):

'''

linearmat_1的向量化.

Input: w 是权重(包含截距), and X 数据矩阵 (n x (d+1)) (包含特征)

Output:包含线性模型预测的向量

'''

return np.dot(X, w)

1.3 向量化和非向量化代码的速度比较(Comparing speed of the vectorized vs unvectorized code)

非向量化代码的时间

1

2

3

4

5

6

7

import time

w = np.array([1,1])

b = 1

t0 = time.time()

p1 = linearmat_1(w, b, x_input)

t1 = time.time()

print('the time for non-vectorized code is %s' % (t1 - t0))

向量化代码的时间

1

2

3

4

5

6

7

8

# 把截距添加到权重向量

wb = np.array([b, w[0], w[1]])

# 在输入矩阵中添加为1的参数(对应截距)

x_in = np.concatenate([np.ones([np.shape(x_input)[0], 1]), x_input], axis=1)

t0 = time.time()

p2 = linearmat_2(wb, x_in)

t1 = time.time()

print('the time for vectorized code is %s' % (t1 - t0))

1.4 定义损失函数(Defining the Cost Function)

\[C(\mathbf{y}, \mathbf{t}) = \frac{1}{2n}(\mathbf{y}-\mathbf{t})^\top (\mathbf{y}-\mathbf{t}).\]1

2

3

4

5

6

7

8

9

def cost(w, X, y):

'''

评估向量化方法的损失函数

输入 `X` 和输出 `y`, 在权重 `w`.

'''

residual = y - linearmat_2(w, X) # 获取差值

err = np.dot(residual, residual) / (2 * len(y))

return err

例如,假设的损失:

1

cost(wb, x_in, y_target)

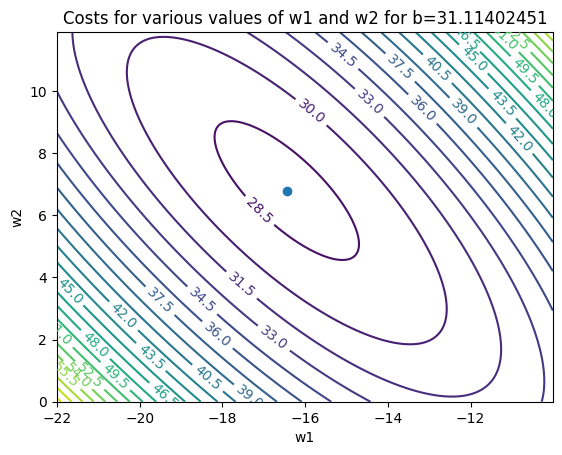

1.5 在权重空间画出损失(Plotting cost in weight space)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

w1s = np.arange(-22, -10, 0.01)

w2s = np.arange(0, 12, 0.1)

b = 31.11402451

W1, W2 = np.meshgrid(w1s, w2s)

z_cost = np.zeros([len(w2s), len(w1s)])

for i in range(W1.shape[0]):

for j in range(W1.shape[1]):

w = np.array([b, W1[i, j], W2[i, j]])

z_cost[i, j] = cost(w, x_in, y_target)

CS = plt.contour(W1, W2, z_cost,25)

plt.clabel(CS, inline=1, fontsize=10)

plt.title('Costs for various values of w1 and w2 for b=31.11402451')

plt.xlabel("w1")

plt.ylabel("w2")

plt.plot([-16.44307658], [6.79809451], 'o')

plt.show()

1.6 精确的解决方法(Exact Solution)

\[\mathbf{w}^*=(X^\top X)^{-1}X^\top y.\]1

2

3

4

5

6

7

8

9

10

11

12

13

14

def solve_exactly(X, y):

'''

精确解决线性回归(完全向量化)

给出 `X` - n x (d+1) 的输入矩阵

`y` - 目标输出

返回(d+1)维的最佳权重向量

'''

A = np.dot(X.T, X)

c = np.dot(X.T, y)

return np.dot(np.linalg.inv(A), c)

w_exact = solve_exactly(x_in, y_target)

print(w_exact)

2. 线性回归的梯度下降(Gradient Descent for Linear Regression)

2.1 Boston Housing的数据

2.1.1 导入库

1

2

3

4

5

6

import matplotlib

import numpy as np

import random

import warnings

import matplotlib.pyplot as plt

from sklearn import preprocessing # for normalization

2.1.2 导入数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

import pandas as pd

import numpy as np

data_url = "https://lib.stat.cmu.edu/datasets/boston"

raw_df = pd.read_csv(data_url, sep="\s+", skiprows=22, header=None)

boston_data = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])

target = raw_df.values[1::2, 2]

data = boston_data;

x_input = data # a data matrix

y_target = target; # a vector for all outputs

# add a feature 1 to the dataset, then we do not need to consider the bias and weight separately

x_in = np.concatenate([np.ones([np.shape(x_input)[0], 1]), x_input], axis=1)

# we normalize the data so that each has regularity

x_in = preprocessing.normalize(x_in)

2.2 线性模型(Linear Model)

\[f(x)=\mathbf{w}^\top \mathbf{x}.\]1

2

3

4

5

6

7

def linearmat_2(w, X):

'''

linearmat_1的向量化.

Input: w 是权重(包含截距), and X 数据矩阵 (n x (d+1)) (包含特征)

Output:包含线性模型预测的向量

'''

return np.dot(X, w)

2.3 损失函数(Cost Function)

\[C(\mathbf{y}, \mathbf{t}) = \frac{1}{2n}(\mathbf{y}-\mathbf{t})^\top (\mathbf{y}-\mathbf{t}).\]1

2

3

4

5

6

7

8

9

def cost(w, X, y):

'''

评估向量化方法的损失函数

输入 `X` 和输出 `y`, 在权重 `w`.

'''

residual = y - linearmat_2(w, X) # 获取差值

err = np.dot(residual, residual) / (2 * len(y))

return err

2.4 梯度计算(Gradient Computation)

\[\nabla C(\mathbf{w}) =\frac{1}{n}X^\top\big(X\mathbf{w}-\mathbf{y}\big)\]1

2

3

4

5

6

7

8

9

10

11

12

# 向量化梯度方程

def gradfn(weights, X, y):

'''

给出 `weights` - 当前的对权重的猜想

`X` - (N,d+1)的包含特征`1`的输入特征矩阵

`y` - 目标y值

返回当前数值估计的权重梯度

'''

y_pred = np.dot(X, weights)

error = y_pred - y

return np.dot(X.T, error) / len(y)

2.5 梯度下降(Gradient Descent)

\[\mathbf{w}^{(t+1)} \leftarrow \mathbf{w}^{(t)} - \eta\nabla C(\mathbf{w}^{(t)})\]1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

def solve_via_gradient_descent(X, y, print_every=100,

niter=5000, eta=1):

'''

给出 `X` - (N,D)的输入特征矩阵

`y` - 目标y值

`print_every` - 每'print_every' 迭代报告一次性能

`niter` - 迭代数量的限制

`eta` - 学习率

用梯度下降解决线性回归

返回

`w` - 在`niter`次迭代之后的权重

`idx_res` - 迭代的索引

`err_res` - 迭代的索引对应的损失值

'''

N, D = np.shape(X)

# 初始化所有的权重为0

w = np.zeros([D])

idx_res = []

err_res = []

for k in range(niter):

# 计算梯度

dw = gradfn(w, X, y)

# 梯度下降

w = w - eta * dw

# 每print_every迭代报告一次

if k % print_every == print_every - 1:

t_cost = cost(w, X, y)

print('error after %d iteration: %s' % (k, t_cost))

idx_res.append(k)

err_res.append(t_cost)

return w, idx_res, err_res

w_gd, idx_gd, err_gd = solve_via_gradient_descent( X=x_in, y=y_target)

Output(partial):

1

2

3

4

5

6

7

8

9

error after 2199 iteration: 26.616940808457816

error after 2299 iteration: 26.475493515509722

error after 2399 iteration: 26.33686272884545

error after 2499 iteration: 26.20095757351077

...

error after 4699 iteration: 23.78096067719028

error after 4799 iteration: 23.692775341901584

error after 4899 iteration: 23.606193772224405

error after 4999 iteration: 23.521184465124133

2.6 小批量梯度下降(Minibatch Grident Descent)

\(C(\mathbf{w})=\frac{1}{n}\sum_{i=1}^nC_i(\mathbf{w}),\) where $C_i(\mathbf{w})$ is the loss of the model $\mathbf{w}$ on the $i$-th example. In our Boston House Price prediction problem, $C_i$ takes the form $C_i(\mathbf{w})=\frac{1}{2}(\mathbf{w}^\top\mathbf{x}^{(i)}-y^{(i)})^2$.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

def solve_via_minibatch(X, y, print_every=100,

niter=5000, eta=1, batch_size=50):

'''

求解具有 Nesterov 动量的线性回归权重。

给出 `X` - (N,D)的输入特征矩阵

`y` - 目标y值

`print_every` - 每'print_every' 迭代报告一次性能

`niter` - 迭代数量的限制

`eta` - 学习率

`batch_size` - 小批量的大小

返回

`w` - 在`niter`次迭代之后的权重

`idx_res` - 迭代的索引

`err_res` - 迭代的索引对应的损失值

'''

N, D = np.shape(X)

# 初始化所有的权重为0

w = np.zeros([D])

idx_res = []

err_res = []

tset = list(range(N))

for k in range(niter):

idx = random.sample(tset, batch_size)

#sample batch of data

sample_X = X[idx, :]

sample_y = y[idx]

dw = gradfn(w, sample_X, sample_y)

w = w - eta * dw

if k % print_every == print_every - 1:

t_cost = cost(w, X, y)

print('error after %d iteration: %s' % (k, t_cost))

idx_res.append(k)

err_res.append(t_cost)

return w, idx_res, err_res

w_batch, idx_batch, err_batch = solve_via_minibatch( X=x_in, y=y_target)

Output(partial):

1

2

3

4

5

6

7

8

9

error after 2199 iteration: 26.693266124289604

error after 2299 iteration: 26.467262454186802

error after 2399 iteration: 27.126877660242872

error after 2499 iteration: 26.318343441629153

...

error after 4699 iteration: 24.030476040027352

error after 4799 iteration: 23.87462298591681

error after 4899 iteration: 23.6754448557431

error after 4999 iteration: 23.54132978738581

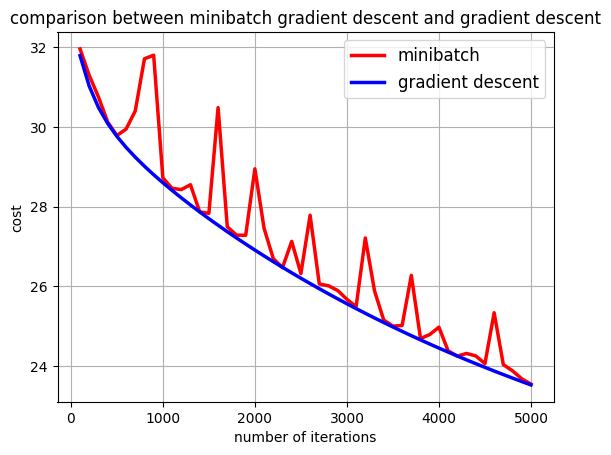

2.7 小批量梯度下降和梯度下降的比较(Comparison between Minibatch Gradient Descent and Gradient Descent)

1

2

3

4

5

6

7

8

plt.plot(idx_batch, err_batch, color="red", linewidth=2.5, linestyle="-", label="minibatch")

plt.plot(idx_gd, err_gd, color="blue", linewidth=2.5, linestyle="-", label="gradient descent")

plt.legend(loc='upper right', prop={'size': 12})

plt.title('comparison between minibatch gradient descent and gradient descent')

plt.xlabel("number of iterations")

plt.ylabel("cost")

plt.grid()

plt.show()

3. 感知器(Perceptron)

3.1 导入库

1

2

3

4

import numpy as np

import random

import matplotlib.pyplot as plt

from sklearn.metrics import accuracy_score

3.2 数据生成(Data Generation)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# `no_points`:表示要生成的数据点的数量。

def generate_data(no_points):

# 创建一个形状为 (no_points, 2) 的零矩阵

X = np.zeros(shape=(no_points, 2))

# 创建一个长度为 no_points 的零向量

Y = np.zeros(shape=no_points)

for ii in range(no_points):

X[ii, 0] = random.randint(0,20)

X[ii, 1] = random.randint(0,20)

if X[ii, 0]+X[ii, 1] > 20:

Y[ii] = 1

else:

Y[ii] = -1

return X, Y

3.3 类(Class)

1

2

3

4

class Person():

def __init__(self, name, age):

self.name = name

self.age = age

1

2

3

4

5

6

7

8

p1 = Person("John", 36)

print(p1.name)

print(p1.age)

'''

John

36

'''

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

class Person():

def __init__(self, name, age):

self.name = name

self.age = age

def myfunc(self):

print("Hello my name is " + self.name)

p1 = Person("John", 36)

p1.myfunc()

'''

Hello my name is John

'''

3.4 感知器逻辑(Perceptron Algorithm)

3.4.1 感知器(Perceptron)

\[\mathbf{x}\mapsto \text{sgn}(\mathbf{w}^\top\mathbf{x}+b)\]3.4.2 感知器逻辑(Perceptron Algorithm)

\[y(b+y+(\mathbf{w}+y\mathbf{x})^\top\mathbf{x})=yb+y\mathbf{w}^\top\mathbf{x}+y^2+y^2\mathbf{x}^\top\mathbf{x}> y(b+\mathbf{w}^\top\mathbf{x}).\]1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

class Perceptron():

"""

Class for performing Perceptron.

X is the input array with n rows (no_examples) and d columns (no_features)

Y is a vector containing elements which indicate the class

(1 for positive class, -1 for negative class)

w is the weight vector (d dimensional vector)

b is the bias value

"""

def __init__(self, b = 0, max_iter = 1000):

# 最大迭代次数

self.max_iter = max_iter

# 权重

self.w = []

# 截距/偏置

self.b = 0

self.no_examples = 0

self.no_features = 0

def train(self, X, Y):

'''

This function applies the perceptron algorithm to train a model w based on X and Y.

It changes both w and b of the class.

'''

# we set the number of examples and the number of features according to the matrix X

self.no_examples, self.no_features = np.shape(X)

# we initialize the weight vector as the zero vector

self.w = np.zeros(self.no_features)

# we only run a limited number of iterations

for ii in range(0, self.max_iter):

# at the begining of each iteration, we set the w_updated to be false (meaning we have not yet found misclassified example)

w_updated = False

# we traverse all the training examples

for jj in range(0, self.no_examples):

# we compute the predicted value and assign it to the variable a

a = self.b + np.dot(self.w, X[jj])

# if we find a misclassified example

if Y[jj] * a <= 0:

# we set w_updated = true as we have found a misclassified example at this iteration

w_updated = True

# we now update w and b

self.w += Y[jj] * X[jj]

self.b += Y[jj]

# if we do not find any misclassified example, we can return the model

if not w_updated:

print("Convergence reached in %i iterations." % ii)

break

# after finishing the iterations we can still find a misclassified example

if w_updated:

print(

"""

WARNING: convergence not reached in %i iterations.

Either dataset is not linearly separable,

or max_iter should be increased

""" % self.max_iter

)

def classify_element(self, x_elem):

'''

This function returns the predicted label of the perceptron on an input x_elem

Input:

x_elem: an input feature vector

Output:

return the predictred label of the model (indicated by w and b) on x_elem

'''

return np.sign(self.b + np.dot(self.w, x_elem))

# To do: insert your code to complete the definition of the function classify a data matrix (n examples)

def classify(self, X):

'''

This function returns the predicted labels of the perceptron on an input matrix X

Input:

X: a data matrix with n rows (no_examples) and d columns (no_features)

Output:

return the vector. i-th entry is the predicted label on the i-th example

'''

# predicted_Y = []

# for ii in range(np.shape(X)[0]):

# # we predict the label and add the label to the output vector

# y_elem = self.classify_element(X[ii])

# predicted_Y.append(y_elem)

# # we return the output vector

# vectorization

out = np.dot(X, self.w)

predicted_Y = np.sign(out + self.b)

return predicted_Y

3.5 实验(Experiments)

3.5.1 数据生成(Data Generation)

1

X, Y = generate_data(100)

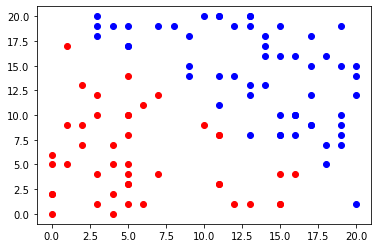

3.5.2 数据集的可视化(Visualization of the dataset)

1

2

3

4

5

6

idx_pos = [i for i in np.arange(100) if Y[i]==1]

idx_neg = [i for i in np.arange(100) if Y[i]==-1]

# make a scatter plot

plt.scatter(X[idx_pos, 0], X[idx_pos, 1], color='blue')

plt.scatter(X[idx_neg, 0], X[idx_neg, 1], color='red')

plt.show()

3.5.3 训练(Train)

1

2

3

4

5

6

7

# Create an instance p

p = Perceptron()

# applies the train algorithm to (X,Y) and sets the weight vector and bias

p.train(X, Y)

predicted_Y = p.classify(X)

acc_tr = accuracy_score(predicted_Y, Y)

print(acc_tr)

3.5.4 测试(Test)

1

2

3

4

5

# we first generate a new dataset

X_test, Y_test = generate_data(100)

predicted_Y_test = p.classify(X_test)

acc = accuracy_score(Y_test, predicted_Y_test)

print(acc)

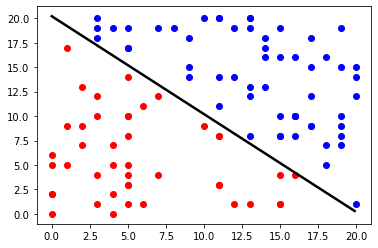

3.5.5 感知器的可视化(Visulization of the perceptron)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# we get an array of the first feature

x1 = np.arange(0, 20, 0.1)

# bias

b = p.b

# weight vector

w = p.w

# we now use list comprehension to generate the array of the second feature

x2 = [(-b-w[0]*x)/w[1] for x in x1]

plt.scatter(X[idx_pos, 0], X[idx_pos, 1], color='blue')

plt.scatter(X[idx_neg, 0], X[idx_neg, 1], color='red')

# plot the hyperplane corresponding to the perceptron

plt.plot(x1, x2, color="black", linewidth=2.5, linestyle="-")

plt.show()

4. 卷积神经网络(Convolutional Neural Network)

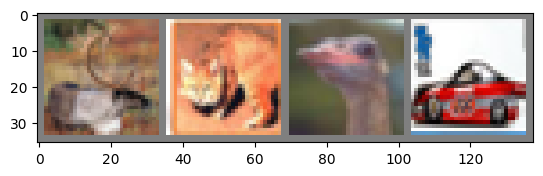

4.1 训练一个图像分类器(Training an image classifier)

- 加载并且用

torchvision标准化 CIFAR10 训练和测试数据集 - 定义一个卷积神经网络

- 定义一个损失函数

- 在训练集上训练网络

- 在测试集上测试网络

4.2 加载并且标准化CIFAR10(Load and normalize CIFAR10)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

import torch

import torchvision

import torchvision.transforms as transforms

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(f"The current device is {device}")

transform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

batch_size = 4

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size,

shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=batch_size,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

If running on Windows and you get a BrokenPipeError, try setting the num_worker of torch.utils.data.DataLoader() to 0

显示部分训练图像

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

import matplotlib.pyplot as plt

import numpy as np

# functions to show an image

def imshow(img):

img = img / 2 + 0.5 # unnormalize

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

plt.show()

# get some random training images

dataiter = iter(trainloader)

images, labels = next(dataiter)

# show images

imshow(torchvision.utils.make_grid(images))

# print labels

print(' '.join('%5s' % classes[labels[j]] for j in range(batch_size)))

4.3 定义一个卷积神经网络(Define a Convolutional Neural Network)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super().__init__()

# 从3个输入通道到6个输出通道,使用5x5的卷积核

self.conv1 = nn.Conv2d(3, 6, 5)

# 使用2x2的窗口大小并且步长为2

self.pool = nn.MaxPool2d(2, 2)

# 从6个输入通道到16个输出通道,也使用5x5的卷积核

self.conv2 = nn.Conv2d(6, 16, 5)

# 16x5x5的张量展平为120个特征

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

# ReLU激活函数

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = torch.flatten(x, 1) # flatten all dimensions except batch

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

net = Net().to(device)

4.4 定义一个损失函数与优化器(Define a Loss function and optimizer)

1

2

3

4

5

6

7

# 导入pytorch提供的优化算法

import torch.optim as optim

# 定义了交叉熵损失函数

criterion = nn.CrossEntropyLoss()

# 使用随机梯度下降(SGD)作为优化算法, 设置学习率为0.001, 设置动量为0.9。动量在SGD中用于加速训练并避免陷入局部最小值。

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

4.5 训练网络(Train the network)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

# 遍历整个数据集两次

for epoch in range(2):

# 用于累积每个批次的损失,以便后续打印平均损失

running_loss = 0.0

for i, data in enumerate(trainloader, 0):

# get the inputs; data is a list of [inputs, labels] and move them to

#the current device

inputs, labels = data

inputs = inputs.to(device)

labels = labels.to(device)

# 在每次训练步骤之前,将模型中所有参数的梯度设置为零

optimizer.zero_grad()

# forward + backward + optimize

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# print statistics - epoch and loss

running_loss += loss.item()

if i % 2000 == 1999: # print every 2000 mini-batches

print('[%d, %5d] loss: %.3f' %

(epoch + 1, i + 1, running_loss / 2000))

running_loss = 0.0

print('Finished Training')

PATH = './cifar_net.pth'

torch.save(net.state_dict(), PATH)

4.6 在测试数据上测试网络(Test the network on the test data)

选择一批测试数据并显示

1

2

3

4

5

6

dataiter = iter(testloader)

images, labels = next(dataiter) #Selects a mini-batch and its labels

# print images

imshow(torchvision.utils.make_grid(images))

print('GroundTruth: ', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

加载预先保存的模型参数

1

2

net = Net()

net.load_state_dict(torch.load(PATH))

在单批数据上预测

1

2

3

4

images = images.to(device)

labels = labels.to(device)

net = net.to(device)

outputs = net(images)

获取预测结果:

1

2

3

4

_, predicted = torch.max(outputs, 1) #Returns a tuple (max,max indicies), we only need the max indicies.

print('Predicted: ', ' '.join('%5s' % classes[predicted[j]]

for j in range(4)))

评估整体测试集的准确性:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

correct = 0

total = 0

# since we're not training, we don't need to calculate the gradients for our outputs

with torch.no_grad():

for data in testloader:

images, labels = data

images = images.to(device)

labels = labels.to(device)

# calculate outputs by running images through the network

outputs = net(images)

# the class with the highest energy is what we choose as prediction

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (

100 * correct / total))

评估每个类的准确性:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

# prepare to count predictions for each class

correct_pred = {classname: 0 for classname in classes}

total_pred = {classname: 0 for classname in classes}

# again no gradients needed

with torch.no_grad():

for data in testloader:

images, labels = data

images = images.to(device)

labels = labels.to(device)

outputs = net(images)

_, predictions = torch.max(outputs, 1)

# collect the correct predictions for each class

for label, prediction in zip(labels, predictions):

if label == prediction:

correct_pred[classes[label]] += 1

total_pred[classes[label]] += 1

# print accuracy for each class

for classname, correct_count in correct_pred.items():

accuracy = 100 * float(correct_count) / total_pred[classname]

print("Accuracy for class {:5s} is: {:.1f} %".format(classname,

accuracy))

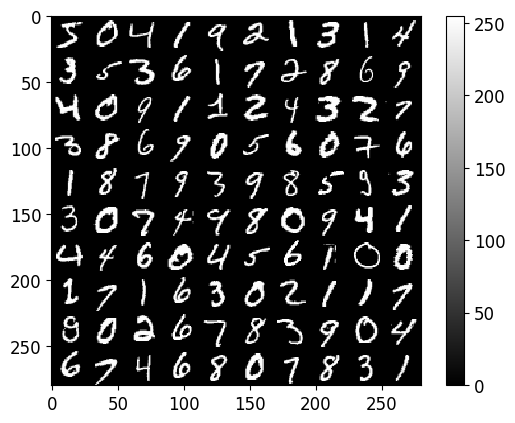

5. 自动编码器(AutoEncoders)

5.1 加载和刷新MNIST(Loading and refreshing MNIST)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

# -*- coding: utf-8 -*- 该文件使用UTF-8编码

# The below is for auto-reloading external modules after they are changed, such as those in ./utils.

# Issue: https://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

# Jupyter Notebook的特定命令,用于自动重新加载外部模块

%load_ext autoreload

%autoreload 2

# `numpy`库用于数组操作,`get_mnist`函数用于获取MNIST数据集

import numpy as np

from utils.data_utils import get_mnist # Helper function. Use it out of the box.

# 常量定义: 数据的存储位置和一个随机种子

DATA_DIR = './data/mnist' # Location we will keep the data.

SEED = 111111

# 使用get_mnist函数从指定的目录加载训练和测试数据。如果数据不在指定位置,它们将被下载

train_imgs, train_lbls = get_mnist(data_dir=DATA_DIR, train=True, download=True)

test_imgs, test_lbls = get_mnist(data_dir=DATA_DIR, train=False, download=True)

# 输出训练和测试数据的相关信息,如其类型、形状、数据类型以及类标签

print("[train_imgs] Type: ", type(train_imgs), "|| Shape:", train_imgs.shape, "|| Data type: ", train_imgs.dtype )

print("[train_lbls] Type: ", type(train_lbls), "|| Shape:", train_lbls.shape, "|| Data type: ", train_lbls.dtype )

print('Class labels in train = ', np.unique(train_lbls))

print("[test_imgs] Type: ", type(test_imgs), "|| Shape:", test_imgs.shape, " || Data type: ", test_imgs.dtype )

print("[test_lbls] Type: ", type(test_lbls), "|| Shape:", test_lbls.shape, " || Data type: ", test_lbls.dtype )

print('Class labels in test = ', np.unique(test_lbls))

# 定义了一些与数据集相关的其他常量,如训练图像的数量、图像的高度、图像的宽度和类别的数量

N_tr_imgs = train_imgs.shape[0] # N hereafter. Number of training images in database.

H_height = train_imgs.shape[1] # H hereafter

W_width = train_imgs.shape[2] # W hereafter

C_classes = len(np.unique(train_lbls)) # C hereafter

'''

[train_imgs] Type: <class 'numpy.ndarray'> || Shape: (60000, 28, 28) || Data type: uint8

[train_lbls] Type: <class 'numpy.ndarray'> || Shape: (60000,) || Data type: int16

Class labels in train = [0 1 2 3 4 5 6 7 8 9]

[test_imgs] Type: <class 'numpy.ndarray'> || Shape: (10000, 28, 28) || Data type: uint8

[test_lbls] Type: <class 'numpy.ndarray'> || Shape: (10000,) || Data type: int16

Class labels in test = [0 1 2 3 4 5 6 7 8 9]

'''

1

2

3

4

5

6

7

8

# Jupyter Notebook特定的命令,保证matplotlib库生成的图像都直接在Notebook内显示

%matplotlib inline

# 导入库,将多个图像绘制在一个网格上

from utils.plotting import plot_grid_of_images # Helper functions, use out of the box.

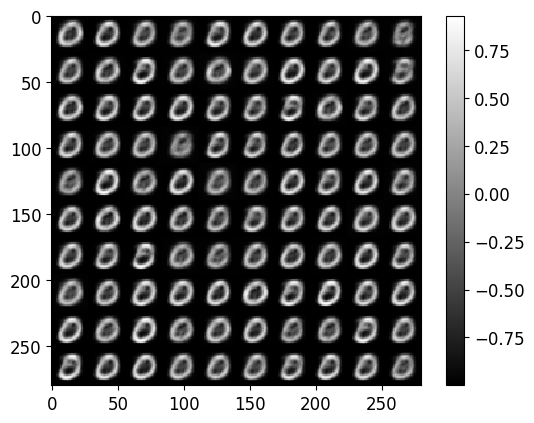

# 绘制了train_imgs中的前100个图像。图像被组织成一个10x10的网格,每行显示10个图像

plot_grid_of_images(train_imgs[0:100], n_imgs_per_row=10)

5.2 数据预处理(Data pre-processing)

5.2.1 将标签的表示更改为长度 C=10 的 one-hot 向量(Change representation of labels to one-hot vectors of length C=10)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

# 为训练和测试标签初始化一个全为0的矩阵。每个标签都将在对应的独热编码向量中有一个值为1的元素

# 对于每个训练标签,我们找到其对应的独热编码向量中应该为1的位置,并将该位置的值设置为1

train_lbls_onehot = np.zeros(shape=(train_lbls.shape[0], C_classes ) )

train_lbls_onehot[ np.arange(train_lbls_onehot.shape[0]), train_lbls ] = 1

test_lbls_onehot = np.zeros(shape=(test_lbls.shape[0], C_classes ) )

test_lbls_onehot[ np.arange(test_lbls_onehot.shape[0]), test_lbls ] = 1

# 打印了转换前后标签的类型、形状和数据类型

print("BEFORE: [train_lbls] Type: ", type(train_lbls), "|| Shape:", train_lbls.shape, " || Data type: ", train_lbls.dtype )

print("AFTER : [train_lbls_onehot] Type: ", type(train_lbls_onehot), "|| Shape:", train_lbls_onehot.shape, " || Data type: ", train_lbls_onehot.dtype )

'''

BEFORE: [train_lbls] Type: <class 'numpy.ndarray'> || Shape: (60000,) || Data type: int16

AFTER : [train_lbls_onehot] Type: <class 'numpy.ndarray'> || Shape: (60000, 10) || Data type: float64

'''

5.2.2 重新缩放图像强度,从 [0,255] 到 [-1, +1](Re-scale image intensities, from [0,255] to [-1, +1])

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

# This commonly facilitates learning:

# A zero-centered signal with small magnitude allows avoiding exploding/vanishing problems easier.

from utils.data_utils import normalize_int_whole_database # Helper function. Use out of the box.

# 图像的强度值被归一化到了[-1, +1]的范围

train_imgs = normalize_int_whole_database(train_imgs, norm_type="minus_1_to_1")

test_imgs = normalize_int_whole_database(test_imgs, norm_type="minus_1_to_1")

# Lets plot one image.

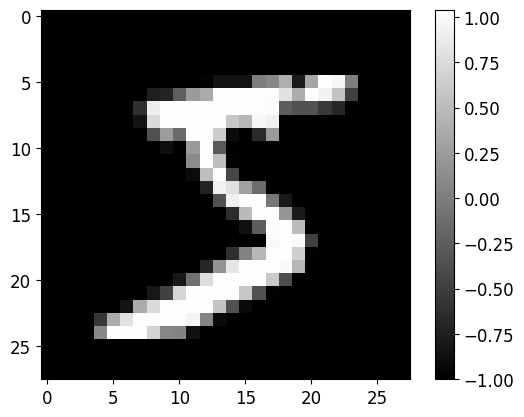

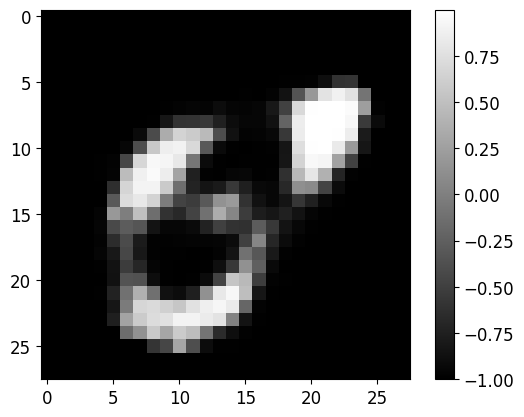

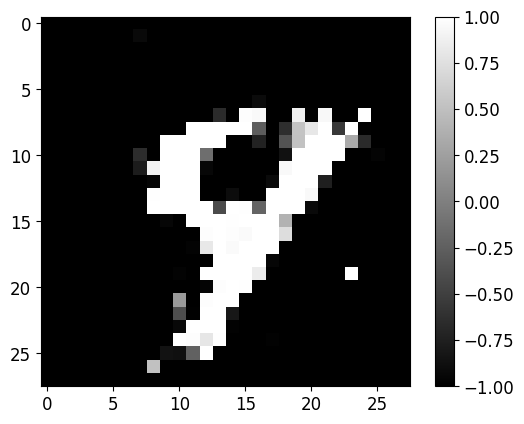

from utils.plotting import plot_image # Helper function, use out of the box.

index = 0 # Try any, up to 60000

print("Plotting image of index: [", index, "]")

print("Class label for this image is: ", train_lbls[index])

print("One-hot label representation: [", train_lbls_onehot[index], "]")

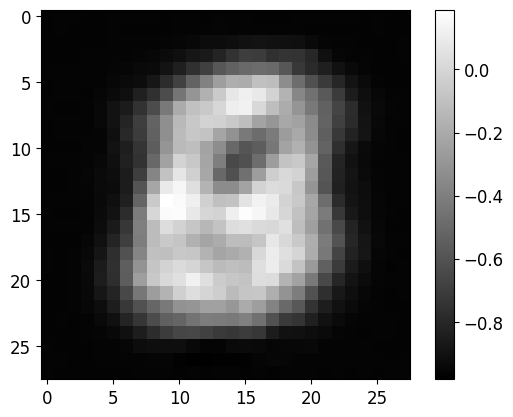

plot_image(train_imgs[index])

# Notice the magnitude of intensities. Black is now negative and white is positive float.

# Compare with intensities of figure further above.

'''

Plotting image of index: [ 0 ]

Class label for this image is: 5

One-hot label representation: [ [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.] ]

'''

5.2.3 将图像从 2D 矩阵展平为 1D 向量。 MLP 将特征向量作为输入,而不是 2D 图像(Flatten the images, from 2D matrices to 1D vectors. MLPs take feature-vectors as input, not 2D images)

1

2

3

4

5

6

7

8

9

10

11

12

13

# 展平图像数据:

# 将每张图像的像素展平成一个一维数组。这通常是在将图像数据输入到全连接神经网络之前所需要做的,因为全连接层需要一维的输入向量

train_imgs_flat = train_imgs.reshape([train_imgs.shape[0], -1]) # Preserve 1st dim (S = num Samples), flatten others.

test_imgs_flat = test_imgs.reshape([test_imgs.shape[0], -1])

print("Shape of numpy array holding the training database:")

print("Original : [N, H, W] = [", train_imgs.shape , "]")

print("Flattened: [N, H*W] = [", train_imgs_flat.shape , "]")

'''

Shape of numpy array holding the training database:

Original : [N, H, W] = [ (60000, 28, 28) ]

Flattened: [N, H*W] = [ (60000, 784) ]

'''

5.3 为了AE用SGD进行无监督训练(Unsupervised training with SGD for Auto-Encoders)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

from utils.plotting import plot_train_progress_1, plot_grids_of_images # Use out of the box

# 从训练数据中随机抽取一个批次的图片

def get_random_batch(train_imgs, train_lbls, batch_size, rng):

# train_imgs: Images. Numpy array of shape [N, H, W]

# train_lbls: Labels of images. None, or Numpy array of shape [N, C_classes], one hot label for each image.

# batch_size: integer. Size that the batch should have.

####### Sample a random batch of images for training. Fill in the blanks (???) #########

indices = range(0, batch_size) # Remove this line after you fill-in and un-comment the below.

indices = rng.randint(low=0, high=train_imgs.shape[0], size=batch_size, dtype='int32')

#indices = rng.randint(low=??????, high=train_imgs.shape[???????], size=?????????, dtype='int32')

##############################################################################################

train_imgs_batch = train_imgs[indices]

if train_lbls is not None: # Enables function to be used both for supervised and unsupervised learning

train_lbls_batch = train_lbls[indices]

else:

train_lbls_batch = None

return [train_imgs_batch, train_lbls_batch]

def unsupervised_training_AE(net,

loss_func,

rng,

train_imgs_all,

batch_size,

learning_rate,

total_iters,

iters_per_recon_plot=-1):

# net: 自编码器网络对象 Instance of a model. See classes: Autoencoder, MLPClassifier, etc further below

# loss_func: 用于计算损失的函数 Function that computes the loss. See functions: reconstruction_loss or cross_entropy.

# rng: 随机数生成器对象 numpy random number generator

# train_imgs_all: 训练图像的完整集合 All the training images. Numpy array, shape [N_tr, H, W]

# batch_size: 每次迭代用于训练的图像数量 Size of the batch that should be processed per SGD iteration by a model.

# learning_rate: 优化器的学习率 self explanatory.

# total_iters: 训练总迭代次数 how many SGD iterations to perform.

# iters_per_recon_plot: 每隔多少迭代绘制一次重构图像,默认为-1,表示不绘制 Integer. Every that many iterations the model predicts training images ...

# ...and we plot their reconstruction. For visual observation of the results.

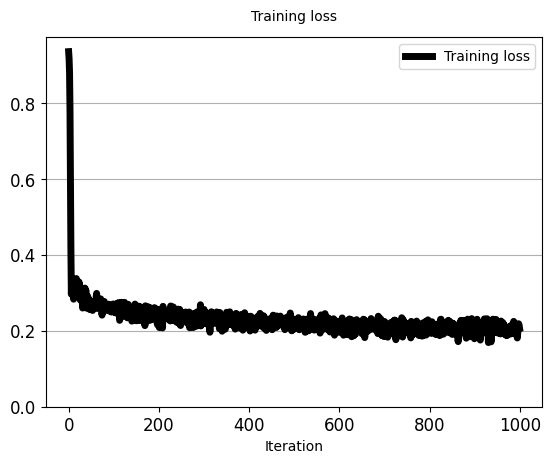

# 初始化一个空列表,用于存储训练过程中的损失值。

loss_values_to_plot = []

# 创建一个Adam优化器,用于更新网络 net 的参数。

optimizer = optim.Adam(net.params, lr=learning_rate) # Will use PyTorch's Adam optimizer out of the box

# 随机梯度下降(SGD)

for t in range(total_iters):

# Sample batch for this SGD iteration

x_imgs, _ = get_random_batch(train_imgs_all, None, batch_size, rng)

# Forward pass: 通过网络执行前向传播,获取重构的图像和编码的潜在表示。

x_pred, z_codes = net.forward_pass(x_imgs)

# Compute loss: 计算重构图像和原始图像之间的损失。

loss = loss_func(x_pred, x_imgs)

# Pytorch way

# 在每次梯度更新前清零累积的梯度。

optimizer.zero_grad()

# 执行反向传播,计算损失相对于网络参数的梯度。

_ = net.backward_pass(loss)

# 应用梯度更新网络参数。

optimizer.step()

# ==== Report training loss and accuracy ======

loss_np = loss if type(loss) is type(float) else loss.item() # Pytorch returns tensor. Cast to float

print("[iter:", t, "]: Training Loss: {0:.2f}".format(loss))

loss_values_to_plot.append(loss_np)

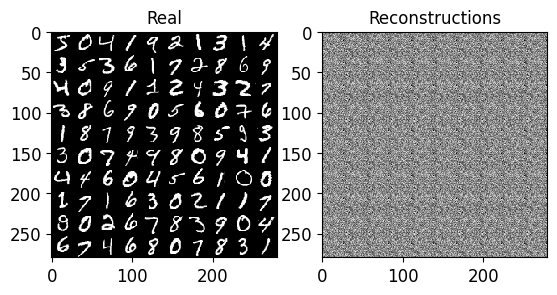

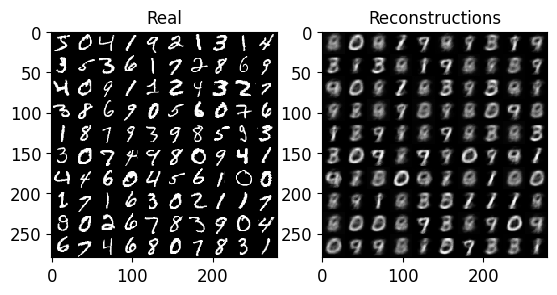

# =============== Every few iterations, show reconstructions ================#

if t==total_iters-1 or t%iters_per_recon_plot == 0:

# Reconstruct all images, to plot reconstructions.

x_pred_all, z_codes_all = net.forward_pass(train_imgs_all)

# Cast tensors to numpy arrays

x_pred_all_np = x_pred_all if type(x_pred_all) is np.ndarray else x_pred_all.detach().numpy()

# Predicted reconstructions have vector shape. Reshape them to original image shape.

train_imgs_resh = train_imgs_all.reshape([train_imgs_all.shape[0], H_height, W_width])

x_pred_all_np_resh = x_pred_all_np.reshape([train_imgs_all.shape[0], H_height, W_width])

# Plot a few images, originals and predicted reconstructions.

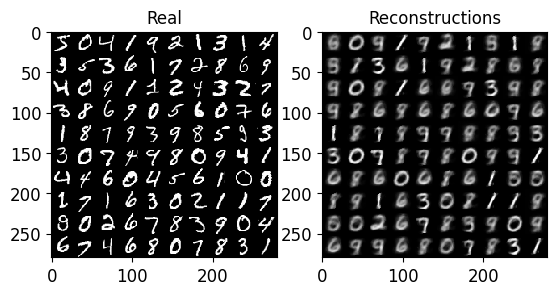

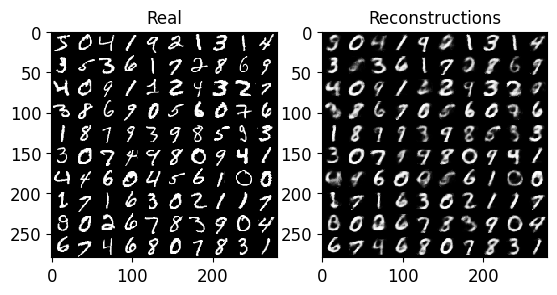

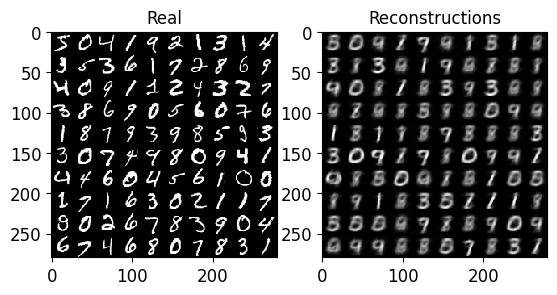

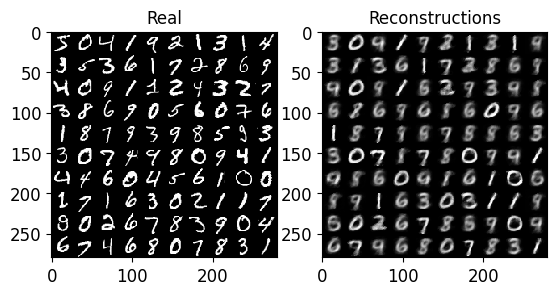

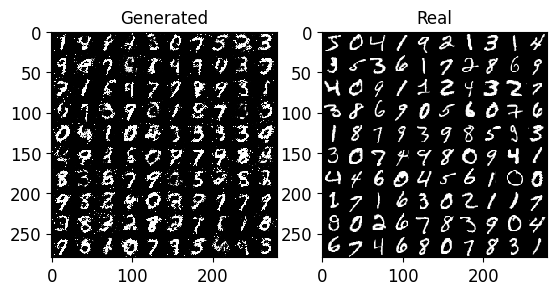

plot_grids_of_images([train_imgs_resh[0:100], x_pred_all_np_resh[0:100]],

titles=["Real", "Reconstructions"],

n_imgs_per_row=10,

dynamically=True)

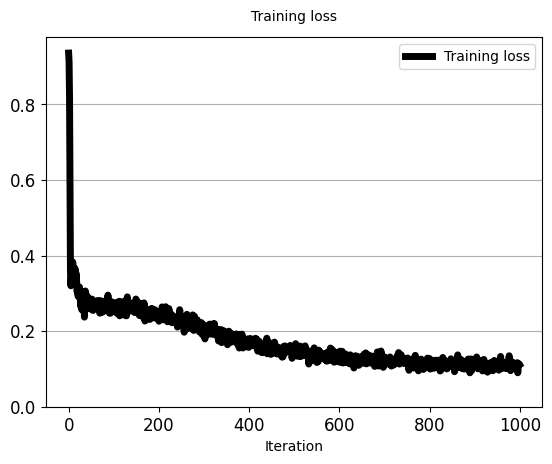

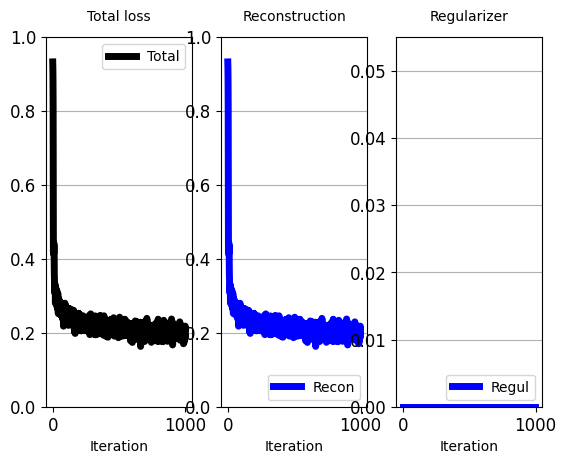

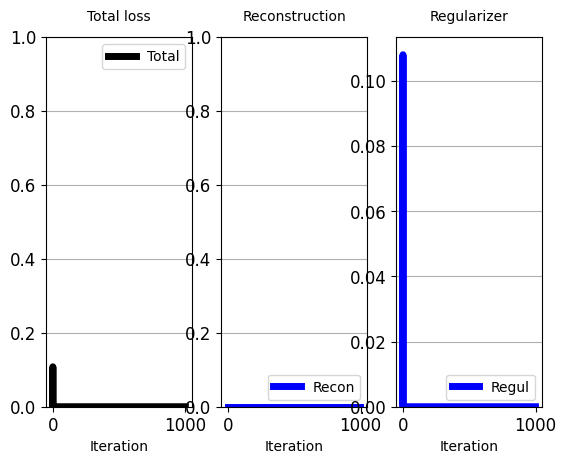

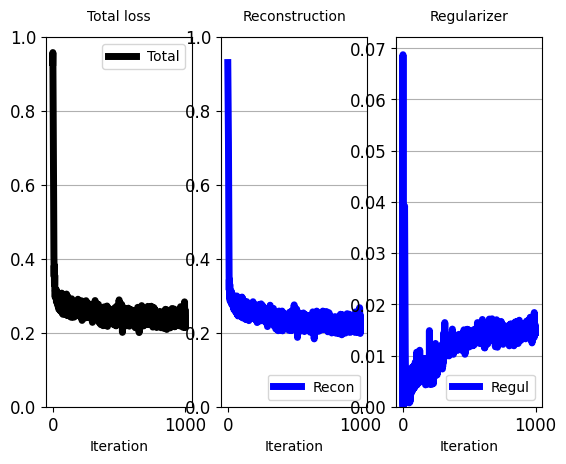

# In the end of the process, plot loss.

plot_train_progress_1(loss_values_to_plot, iters_per_point=1)

5.4 自动编码器(Auto-Encoder)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

# -*- coding: utf-8 -*-

import torch

import torch.optim as optim

import torch.nn as nn

# 定义了一个基本的神经网络结构和反向传播

class Network():

def backward_pass(self, loss):

# Performs back propagation and computes gradients

# With PyTorch, we do not need to compute gradients analytically for parameters were requires_grads=True,

# Calling loss.backward(), torch's Autograd automatically computes grads of loss wrt each parameter p,...

# ... and **puts them in p.grad**. Return them in a list.

loss.backward()

grads = [param.grad for param in self.params]

return grads

# 定义了一个四层的自编码器。网络由输入层、编码器隐藏层、瓶颈层、解码器隐藏层和输出层组成

class Autoencoder(Network):

def __init__(self, rng, D_in, D_hid_enc, D_bottleneck, D_hid_dec):

# Construct and initialize network parameters

D_in = D_in # Dimension of input feature-vectors. Length of a vectorised image.

D_hid_1 = D_hid_enc # Dimension of Encoder's hidden layer

D_hid_2 = D_bottleneck

D_hid_3 = D_hid_dec # Dimension of Decoder's hidden layer

D_out = D_in # Dimension of Output layer.

self.D_bottleneck = D_bottleneck # Keep track of it, we will need it.

##### TODO: Initialize the Auto-Encoder's parameters. Also see forward_pass(...)) ########

# Dimensions of parameter tensors are (number of neurons + 1) per layer, to account for +1 bias.

# 初始化权重的值, 根据正态随机分布

w1_init = rng.normal(loc=0.0, scale=0.01, size=(D_in+1, D_hid_1))

w2_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_1+1, D_hid_2))

w3_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_2+1, D_hid_3))

w4_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_3+1, D_out))

# Pytorch tensors, parameters of the model

# Use the above numpy arrays as of random floats as initialization for the Pytorch weights.

# 权重被转换为tensor张量

w1 = torch.tensor(w1_init, dtype=torch.float, requires_grad=True)

w2 = torch.tensor(w2_init, dtype=torch.float, requires_grad=True)

w3 = torch.tensor(w3_init, dtype=torch.float, requires_grad=True)

w4 = torch.tensor(w4_init, dtype=torch.float, requires_grad=True)

# Keep track of all trainable parameters:

self.params = [w1, w2, w3, w4]

###########################################################################

# 定义了输入如何通过自编码器的各层进行处理。它对编码器和解码器隐藏层使用ReLU激活函数(preact),并对输出层使用tanh激活函数

def forward_pass(self, batch_imgs):

# Get parameters

[w1, w2, w3, w4] = self.params

# 将输入数据转换为torch张量

batch_imgs_t = torch.tensor(batch_imgs, dtype=torch.float) # Makes pytorch array to pytorch tensor.

# 添加bias单元

unary_feature_for_bias = torch.ones(size=(batch_imgs.shape[0], 1)) # [N, 1] column vector.

x = torch.cat((batch_imgs_t, unary_feature_for_bias), dim=1) # Extra feature=1 for bias.

#### TODO: Implement the operations at each layer #####

# Layer 1

h1_preact = x.mm(w1) # 计算预激活值

h1_act = h1_preact.clamp(min=0) # 应用ReLU激活函数

# Layer 2 (bottleneck):

h1_ext = torch.cat((h1_act, unary_feature_for_bias), dim=1) # 添加bias单元到隐藏层1

h2_preact = h1_ext.mm(w2) # 计算预激活值

h2_act = h2_preact.clamp(min=0) # 应用ReLU激活函数

# Layer 3:

h2_ext = torch.cat((h2_act, unary_feature_for_bias), dim=1) # 添加bias单元到隐藏层2

h3_preact = h2_ext.mm(w3) # 计算预激活值

h3_act = h3_preact.clamp(min=0) # 应用ReLU激活函数

# Layer 4 (output):

h3_ext = torch.cat((h3_act, unary_feature_for_bias), dim=1) # 添加bias单元到隐藏层3

h4_preact = h3_ext.mm(w4) # 计算预激活值

h4_act = torch.tanh(h4_preact) # 应用tanh激活函数

# Output layer

x_pred = h4_act

#######################################################

### TODO: Get bottleneck's activations ######

# Bottleneck actications

acts_bottleneck = h2_act

#############################################

return (x_pred, acts_bottleneck)

# 计算重建图像与原始图像之间的均方误差。这个损失在训练过程中用于调整网络的权重

def reconstruction_loss(x_pred, x_real, eps=1e-7):

# Cross entropy: See Lecture 5, slide 19.

# x_pred: [N, D_out] Prediction returned by forward_pass. Numpy array of shape [N, D_out]

# x_real: [N, D_in]

# If number array is given, change it to a Torch tensor.

x_pred = torch.tensor(x_pred, dtype=torch.float) if type(x_pred) is np.ndarray else x_pred

x_real = torch.tensor(x_real, dtype=torch.float) if type(x_real) is np.ndarray else x_real

######## TODO: Complete the calculation of Reconstruction loss for each sample ###########

loss_recon = torch.mean(torch.square(x_pred - x_real), dim=1)

# NOTE: Notice a difference from theory in Lecture: In implementations, we often calculate...

# the *mean* square error over output's dimensions, rather than the *sum* as often shown in theory.

# This makes the loss independent of the dimensionality of the input/output, so it can be used

# without any change for different architectures and image sizes.

# Otherwise we'd have to adapt the Learning rate whenever we use a network for different image sizes

# to account for the change of the loss's scale.

##########################################################################################

cost = torch.mean(loss_recon, dim=0) # Expectation of loss: Mean over samples (axis=0).

return cost

# Create the network

rng = np.random.RandomState(seed=SEED)

autoencoder_thin = Autoencoder(rng=rng,

D_in=H_height*W_width,

D_hid_enc=256,

D_bottleneck=2,

D_hid_dec=256)

# Start training

# 定义了自编码器的训练循环。过程包括:

# 随机抽取图像批次。

# 通过自编码器处理图像以获得重构的输出。

# 计算重构和原始图像之间的损失。

# 反向传播来更新网络的权重。

# 可选择地每隔几次迭代绘制重构的图像进行可视化。

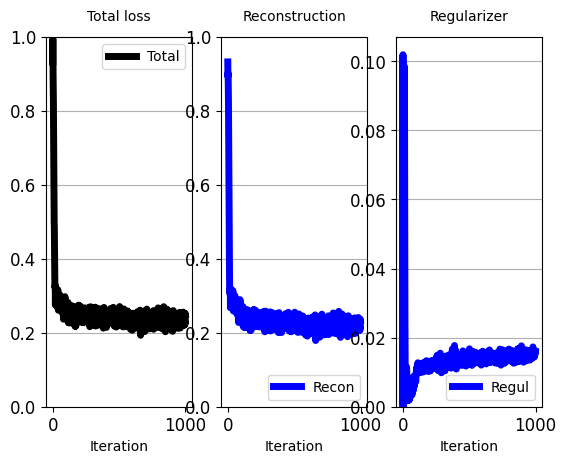

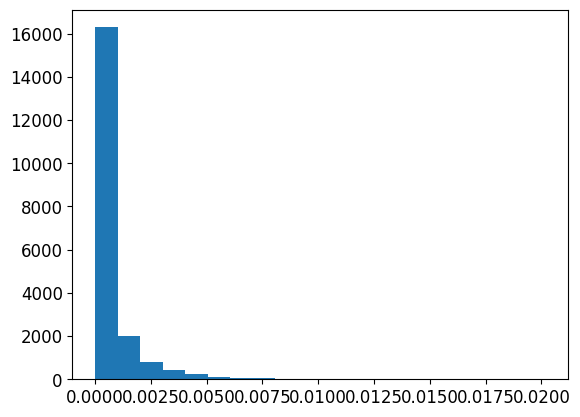

unsupervised_training_AE(autoencoder_thin,

reconstruction_loss,

rng,

train_imgs_flat,

batch_size=40,

learning_rate=3e-3,

total_iters=1000,

iters_per_recon_plot=50)

'''

[iter: 0 ]: Training Loss: 0.94

[iter: 1 ]: Training Loss: 0.92

[iter: 2 ]: Training Loss: 0.88

[iter: 3 ]: Training Loss: 0.78

[iter: 4 ]: Training Loss: 0.60

[iter: 5 ]: Training Loss: 0.42

[iter: 6 ]: Training Loss: 0.30

[iter: 7 ]: Training Loss: 0.33

[iter: 8 ]: Training Loss: 0.31

[iter: 9 ]: Training Loss: 0.32

[iter: 10 ]: Training Loss: 0.32

[iter: 11 ]: Training Loss: 0.28

[iter: 12 ]: Training Loss: 0.32

[iter: 13 ]: Training Loss: 0.32

[iter: 14 ]: Training Loss: 0.32

[iter: 15 ]: Training Loss: 0.31

[iter: 16 ]: Training Loss: 0.32

[iter: 17 ]: Training Loss: 0.34

[iter: 18 ]: Training Loss: 0.33

[iter: 19 ]: Training Loss: 0.32

[iter: 20 ]: Training Loss: 0.29

[iter: 21 ]: Training Loss: 0.30

[iter: 22 ]: Training Loss: 0.31

[iter: 23 ]: Training Loss: 0.33

[iter: 24 ]: Training Loss: 0.32

[iter: 25 ]: Training Loss: 0.29

...

[iter: 47 ]: Training Loss: 0.26

[iter: 48 ]: Training Loss: 0.27

[iter: 49 ]: Training Loss: 0.28

[iter: 50 ]: Training Loss: 0.27

'''

5.5 对潜在(瓶颈)表示中的所有训练样本进行编码(Encode all training samples in the latent (bottleneck) representation)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

import matplotlib.pyplot as plt

# 接受的参数包括一个网络、一组扁平化的图像、标签、批处理大小、总迭代次数和一个布尔值决定是否绘制二维嵌入

def encode_and_get_min_max_z(net,

imgs_flat,

lbls,

batch_size,

total_iterations=None,

plot_2d_embedding=True):

# This function encodes images, plots the first 2 dimensions of the codes in a plot, and finally...

# ... returns the minimum and maximum values of the codes for each dimensions of Z.

# ... We will use this at a layer task.

# Arguments:

# imgs_flat: Numpy array of shape [Number of images, H * W]

# lbls: Numpy array of shape [number of images], with 1 integer per image. The integer is the class (digit).

# total_iterations: How many batches to encode. We will use this so that we dont encode and plot ...

# ... the whoooole training database, because the plot will get cluttered with 60000 points.

# Returns:

# min_z: numpy array, vector with [dimensions-of-z] elements. Minimum value per dimension of z.

# max_z: numpy array, vector with [dimensions-of-z] elements. Maximum value per dimension of z.

# If total iterations is None, the function will just iterate over all data, by breaking them into batches.

if total_iterations is None:

total_iterations = (train_imgs_flat.shape[0] - 1) // batch_size + 1

z_codes_all = []

lbls_all = []

for t in range(total_iterations):

# Sample batch for this SGD iteration

x_batch = imgs_flat[t*batch_size: (t+1)*batch_size]

lbls_batch = lbls[t*batch_size: (t+1)*batch_size]

# Forward pass:执行前向传递得到预测的图像和编码值

x_pred, z_codes = net.forward_pass(x_batch)

# 如果编码值不是numpy数组,则将其转换为numpy数组

z_codes_np = z_codes if type(z_codes) is np.ndarray else z_codes.detach().numpy()

# 将编码值和标签存储在列表中

z_codes_all.append(z_codes_np) # List of np.arrays

lbls_all.append(lbls_batch)

z_codes_all = np.concatenate(z_codes_all) # Make list of arrays in one array by concatenating along dim=0 (image index)

lbls_all = np.concatenate(lbls_all)

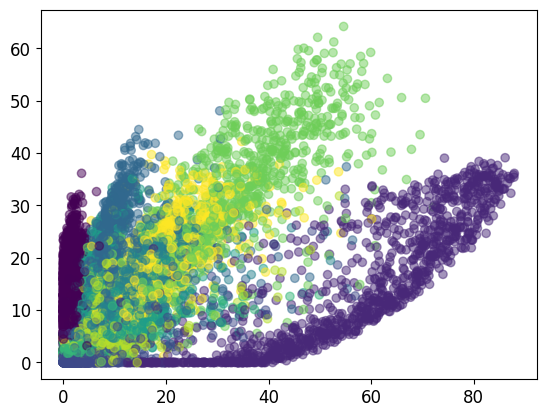

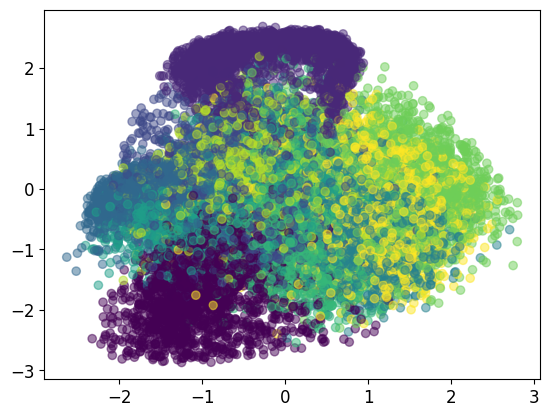

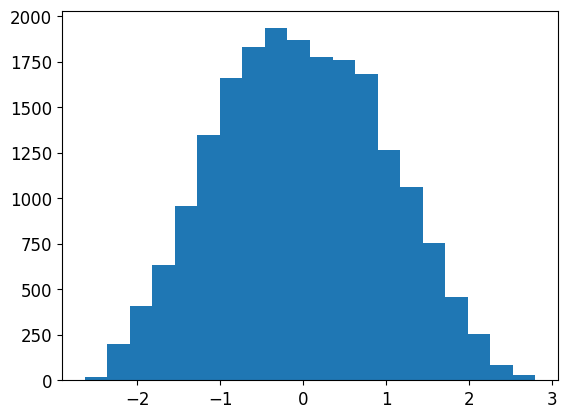

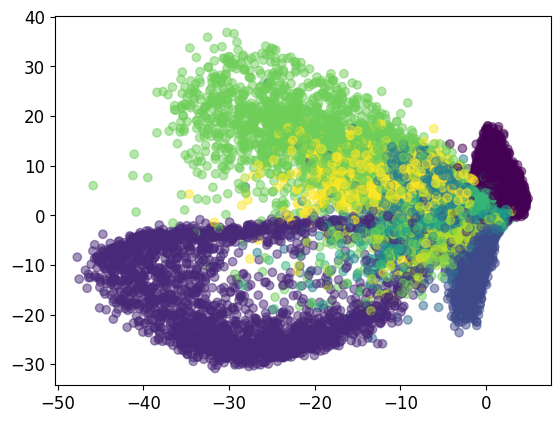

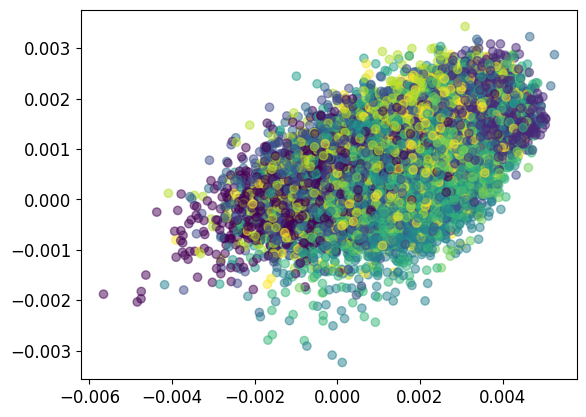

if plot_2d_embedding:

# Plot the codes with different color per class in a scatter plot:

plt.scatter(z_codes_all[:,0], z_codes_all[:,1], c=lbls_all, alpha=0.5) # Plot the first 2 dimensions.

plt.show()

# 计算并返回编码值的每个维度的最小和最大值

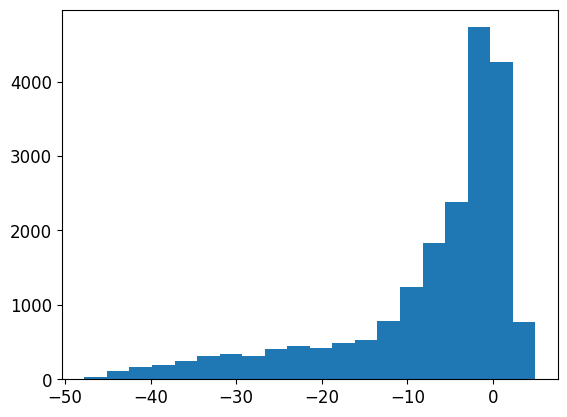

min_z = np.min(z_codes_all, axis=0) # min and max for each dimension of z, over all samples.

max_z = np.max(z_codes_all, axis=0) # Numpy array (vector) of shape [number of z dimensions]

return min_z, max_z

# Encode training samples, and get the min and max values of the z codes (for each dimension)

min_z, max_z = encode_and_get_min_max_z(autoencoder_thin,

train_imgs_flat,

train_lbls,

batch_size=100,

total_iterations=100)

print("Min Z value per dimension of bottleneck:", min_z)

print("Max Z value per dimension of bottleneck:", max_z)

'''

Min Z value per dimension of bottleneck: [0. 0.]

Max Z value per dimension of bottleneck: [87.92656 64.17436]

'''

5.6 用一个大的瓶颈层训练AE(Train an Auto-Encoder with a larger bottleneck layer)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

# 这个任务的目标是检查一个更宽的自编码器如何进行训练,以及它的性能如何

# The below is a copy paste from Task 2.

# Create the network

rng = np.random.RandomState(seed=SEED)

autoencoder_wide = Autoencoder(rng=rng,

D_in=H_height*W_width,

D_hid_enc=256,

D_bottleneck=32,

D_hid_dec=256)

# Start training

unsupervised_training_AE(autoencoder_wide,

reconstruction_loss,

rng,

train_imgs_flat,

batch_size=40,

learning_rate=3e-3,

total_iters=1000,

iters_per_recon_plot=50)

'''

[iter: 968 ]: Training Loss: 0.10

[iter: 969 ]: Training Loss: 0.11

[iter: 970 ]: Training Loss: 0.10

[iter: 971 ]: Training Loss: 0.12

[iter: 972 ]: Training Loss: 0.11

[iter: 973 ]: Training Loss: 0.10

[iter: 974 ]: Training Loss: 0.12

[iter: 975 ]: Training Loss: 0.12

...

[iter: 996 ]: Training Loss: 0.11

[iter: 997 ]: Training Loss: 0.11

[iter: 998 ]: Training Loss: 0.11

[iter: 999 ]: Training Loss: 0.11

'''

5.7 基本自动编码器是否适合合成新数据?(Is basic Auto-Encoder appropriate for synthesizing new data?)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

class Decoder():

def __init__(self, pretrained_ae):

############ TODO: Fill in the gaps. The aim is: ... ############

# ... to use the weights of the pre-trained AE's decoder,... ####

# ... to initialize this Decoder. ####

# Reminder: pretrained_ae.params[LAYER] contrains the params of the corresponding layer. See Task 2.

# 从预先训练的自编码器中提取解码器的权重参数,并将它们转化为Pytorch张量

w1 = torch.tensor(pretrained_ae.params[2], dtype=torch.float, requires_grad=False)

w2 = torch.tensor(pretrained_ae.params[3], dtype=torch.float, requires_grad=False)

self.params = [w1, w2]

###########################################################################

def decode(self, z_batch):

# Reconstruct a batch of images from a batch of z codes.

# z_batch: Random codes. Numpy array of shape: [batch size, number of z dimensions]

[w1, w2] = self.params

z_batch_t = torch.tensor(z_batch, dtype=torch.float) # Making a Pytorch tensor from Numpy array.

# Adding an activation with value 1, for the bias. Similar to Task 2.

unary_feature_for_bias = torch.ones(size=(z_batch_t.shape[0], 1)) # [N, 1] column vector.

##### TODO: Fill in the gaps, to REPLICATE the decoder of the AE from Task 4 #####

# Hidden Layer of Decoder:

z_batch_act_ext = torch.cat((z_batch_t, unary_feature_for_bias), dim=1)# 添加bias单元到隐藏层

h1_preact = z_batch_act_ext.mm(w1)# 计算预激活值

h1_act = h1_preact.clamp(min=0)# 应用ReLU函数

# Output Layer:

h1_ext = torch.cat((h1_act, unary_feature_for_bias), dim=1)# 添加bias单元到隐藏层1

h2_preact = h1_ext.mm(w2)# 计算预及或者

h2_act = torch.tanh(h2_preact)# 应用ReLU函数

##################################################################################

# Output

x_pred = h2_act

return x_pred

# Lets instantiate this Decoder, using the pre-trained AE with 32-dims ("wider") bottleneck:

net_decoder_pretrained = Decoder(autoencoder_wide)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

# 了解更宽瓶颈的自编码器编码的z值的范围

# NOTE: This function was implemented in Task 3. We simply call it again, but for a different AE, the wider.

# Encode training samples, and get the min and max values of the z codes (for each dimension)

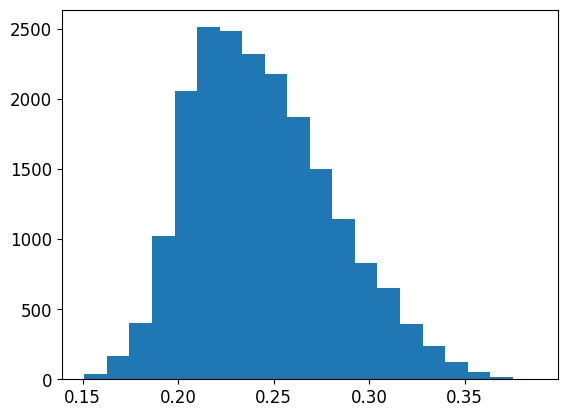

min_z_wider, max_z_wider = encode_and_get_min_max_z(autoencoder_wide,

train_imgs_flat,

train_lbls,

batch_size=100,

total_iterations=None, # So that it runs over all data.

plot_2d_embedding=False) # Code is 32-Dims. Cant plot in 2D

print("Min Z value per dimension:", min_z_wider)

print("Max Z value per dimension:", max_z_wider)

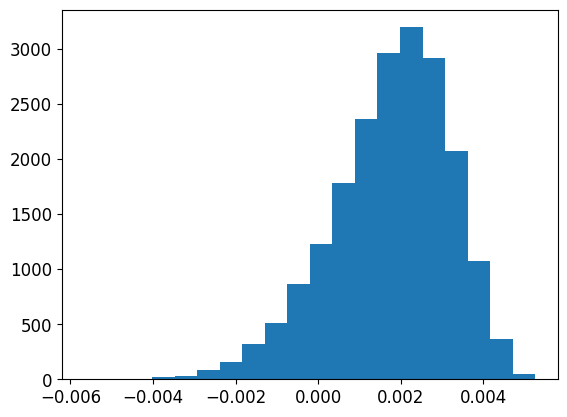

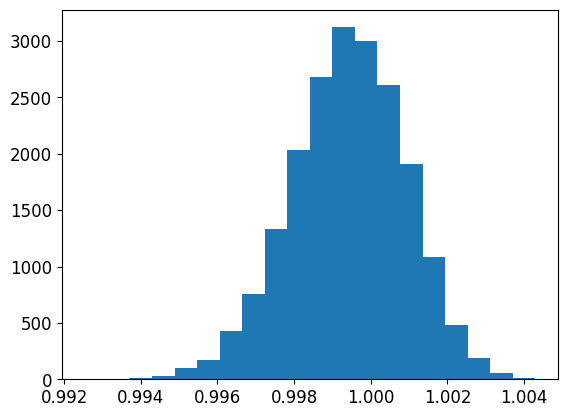

'''

Min Z value per dimension: [0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.

0. 0. 0. 0. 0. 0. 0. 0.]

Max Z value per dimension: [ 0. 0. 42.286503 0. 41.441685 0. 0.

0. 0. 0. 0. 0. 0. 0.

0. 49.99855 39.896553 37.461414 0. 39.914013 0.

35.367657 0. 41.54573 36.36666 0. 0. 43.863304

0. 0. 0. 38.31358 ]

'''

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

def synthesize(net_decoder,

rng,

z_min,

z_max,

n_samples):

# net_decoder: 有预先训练权重的解码器

# z_min: numpy array (vector) of shape [dimensions-of-z]

# z_max: numpy array (vector) of shape [dimensions-of-z]

# n_samples: how many samples to produce.

assert len(z_min.shape) == 1 and len(z_max.shape) == 1

assert z_min.shape[0] == z_max.shape[0]

z_dims = z_min.shape[0] # Dimensionality of z codes (and input to decoder).

# 在[0, 1)的范围内均匀地随机生成z的样本,生成随机潜在编码

z_samples = np.random.random_sample([n_samples, z_dims]) # Returns samples from uniform([0, 1))

z_samples = z_samples * (z_max - z_min) # Scales [0,1] range ==> [0,(max-min)] range

z_samples = z_samples + z_min # Puts the [0,(max-min)] range ==> [min, max] range

# 使用预先训练的解码器网络net_decoder将z样本解码为x样本

x_samples = net_decoder.decode(z_samples)

x_samples_np = x_samples if type(x_samples) is np.ndarray else x_samples.detach().numpy() # torch to numpy

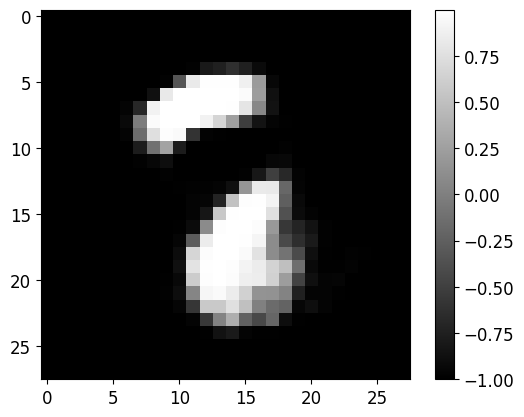

for x_sample in x_samples_np:

plot_image(x_sample.reshape([H_height, W_width]))

# Lets finally run the synthesis and see what happens...

rng = np.random.RandomState(seed=SEED)

synthesize(net_decoder_pretrained,

rng,

min_z_wider, # From further above

max_z_wider, # From further above

n_samples=20)

5.8 使用 AE 从未标记数据中学习,以在标记数据有限时补充监督分类器:让我们首先“从头开始”训练一个监督分类器(Learning from Unlabelled data with AE, to complement Supervised Classifier when Labelled data are limited: Lets first train a supervised Classifier ‘from scratch’)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

class Classifier_3layers(Network):

# 隐藏层使用ReLU激活函数,输出层使用softmax函数来计算类概率

def __init__(self, D_in, D_hid_1, D_hid_2, D_out, rng):

D_in = D_in

D_hid_1 = D_hid_1

D_hid_2 = D_hid_2

D_out = D_out

# === NOTE: Notice that this is exactly the same architecture as encoder of AE in Task 4 ====

w_1_init = rng.normal(loc=0.0, scale=0.01, size=(D_in+1, D_hid_1))

w_2_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_1+1, D_hid_2))

w_out_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_2+1, D_out))

w_1 = torch.tensor(w_1_init, dtype=torch.float, requires_grad=True)

w_2 = torch.tensor(w_2_init, dtype=torch.float, requires_grad=True)

w_out = torch.tensor(w_out_init, dtype=torch.float, requires_grad=True)

self.params = [w_1, w_2, w_out]

def forward_pass(self, batch_inp):

# compute predicted y

[w_1, w_2, w_out] = self.params

# In case input is image, make it a tensor.

batch_imgs_t = torch.tensor(batch_inp, dtype=torch.float) if type(batch_inp) is np.ndarray else batch_inp

unary_feature_for_bias = torch.ones(size=(batch_imgs_t.shape[0], 1)) # [N, 1] column vector.

x = torch.cat((batch_imgs_t, unary_feature_for_bias), dim=1) # Extra feature=1 for bias.

# === NOTE: This is the same architecture as encoder of AE in Task 4, with extra classification layer ===

# Layer 1

h1_preact = x.mm(w_1)

h1_act = h1_preact.clamp(min=0)

# Layer 2 (corresponds to bottleneck of the AE):

h1_ext = torch.cat((h1_act, unary_feature_for_bias), dim=1)

h2_preact = h1_ext.mm(w_2)

h2_act = h2_preact.clamp(min=0)

# Output classification layer

h2_ext = torch.cat((h2_act, unary_feature_for_bias), dim=1)

h_out = h2_ext.mm(w_out)

logits = h_out

# === Addition of a softmax function for

# Softmax activation function.

exp_logits = torch.exp(logits)

y_pred = exp_logits / torch.sum(exp_logits, dim=1, keepdim=True) # 使用softmax输出概率

# sum with Keepdim=True returns [N,1] array. It would be [N] if keepdim=False.

# Torch broadcasts [N,1] to [N,D_out] via repetition, to divide elementwise exp_h2 (which is [N,D_out]).

return y_pred

# 计算预测的类概率与真实类标签之间的交叉熵损失

# 在进行对数运算时,为了数值稳定性,添加了一个epsilon(eps)

def cross_entropy(y_pred, y_real, eps=1e-7):

# y_pred: Predicted class-posterior probabilities, returned by forward_pass. Numpy array of shape [N, D_out]

# y_real: One-hot representation of real training labels. Same shape as y_pred.

# If number array is given, change it to a Torch tensor.

y_pred = torch.tensor(y_pred, dtype=torch.float) if type(y_pred) is np.ndarray else y_pred

y_real = torch.tensor(y_real, dtype=torch.float) if type(y_real) is np.ndarray else y_real

x_entr_per_sample = - torch.sum( y_real*torch.log(y_pred+eps), dim=1) # Sum over classes, axis=1

loss = torch.mean(x_entr_per_sample, dim=0) # Expectation of loss: Mean over samples (axis=0).

return loss

from utils.plotting import plot_train_progress_2

def train_classifier(classifier,

pretrained_AE,

loss_func,

rng,

train_imgs,

train_lbls,

test_imgs,

test_lbls,

batch_size,

learning_rate,

total_iters,

iters_per_test=-1):

# Arguments:

# classifier: A classifier network. It will be trained by this function using labelled data.

# Its input will be either original data (if pretrained_AE=0), ...

# ... or the output of the feature extractor if one is given.

# pretrained_AE: A pretrained AutoEncoder that will *not* be trained here.

# It will be used to encode input data.

# The classifier will take as input the output of this feature extractor.

# If pretrained_AE = None: The classifier will simply receive the actual data as input.

# train_imgs: Vectorized training images

# train_lbls: One hot labels

# test_imgs: Vectorized testing images, to compute generalization accuracy.

# test_lbls: One hot labels for test data.

# batch_size: batch size

# learning_rate: come on...

# total_iters: how many SGD iterations to perform.

# iters_per_test: We will 'test' the model on test data every few iterations as specified by this.

values_to_plot = {'loss':[], 'acc_train': [], 'acc_test': []}

optimizer = optim.Adam(classifier.params, lr=learning_rate)

for t in range(total_iters):

# Sample batch for this SGD iteration

# 随机抽样一批数

train_imgs_batch, train_lbls_batch = get_random_batch(train_imgs, train_lbls, batch_size, rng)

# Forward pass执行前向传递以获得预测

if pretrained_AE is None:

inp_to_classifier = train_imgs_batch

else:

_, z_codes = pretrained_AE.forward_pass(train_imgs_batch) # AE encodes. Output will be given to Classifier

inp_to_classifier = z_codes

y_pred = classifier.forward_pass(inp_to_classifier)

# Compute loss:使用交叉熵函数计算损失

y_real = train_lbls_batch

loss = loss_func(y_pred, y_real) # Cross entropy

# Backprop and updates.使用反向传播计算梯度

optimizer.zero_grad()

grads = classifier.backward_pass(loss)

optimizer.step()

# ==== Report training loss and accuracy ======

# y_pred and loss can be either np.array, or torch.tensor (see later). If tensor, make it np.array.

y_pred_numpy = y_pred if type(y_pred) is np.ndarray else y_pred.detach().numpy()

y_pred_lbls = np.argmax(y_pred_numpy, axis=1) # y_pred is soft/probability. Make it a hard one-hot label.

y_real_lbls = np.argmax(y_real, axis=1)

acc_train = np.mean(y_pred_lbls == y_real_lbls) * 100. # percentage

loss_numpy = loss if type(loss) is type(float) else loss.item()

print("[iter:", t, "]: Training Loss: {0:.2f}".format(loss), "\t Accuracy: {0:.2f}".format(acc_train))

# =============== Every few iterations, show reconstructions ================#

if t==total_iters-1 or t%iters_per_test == 0:

if pretrained_AE is None:

inp_to_classifier_test = test_imgs

else:

_, z_codes_test = pretrained_AE.forward_pass(test_imgs)

inp_to_classifier_test = z_codes_test

y_pred_test = classifier.forward_pass(inp_to_classifier_test)

# ==== Report test accuracy ======

y_pred_test_numpy = y_pred_test if type(y_pred_test) is np.ndarray else y_pred_test.detach().numpy()

y_pred_lbls_test = np.argmax(y_pred_test_numpy, axis=1)

y_real_lbls_test = np.argmax(test_lbls, axis=1)

acc_test = np.mean(y_pred_lbls_test == y_real_lbls_test) * 100.

print("\t\t\t\t\t\t\t\t Testing Accuracy: {0:.2f}".format(acc_test))

# Keep list of metrics to plot progress.

values_to_plot['loss'].append(loss_numpy)

values_to_plot['acc_train'].append(acc_train)

values_to_plot['acc_test'].append(acc_test)

# In the end of the process, plot loss accuracy on training and testing data.

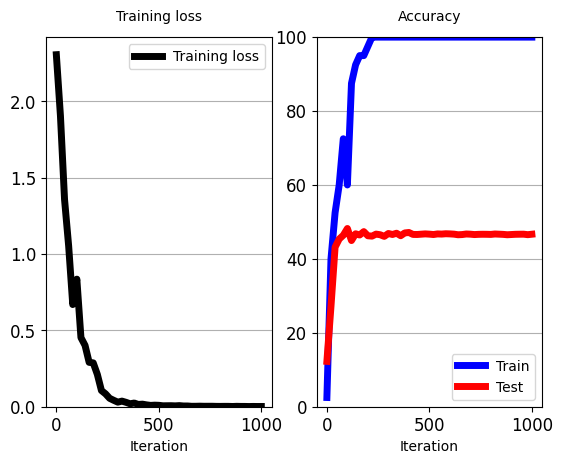

plot_train_progress_2(values_to_plot['loss'], values_to_plot['acc_train'], values_to_plot['acc_test'], iters_per_test)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

# Train Classifier from scratch (initialized randomly)

# Create the network

rng = np.random.RandomState(seed=SEED)

net_classifier_from_scratch = Classifier_3layers(D_in=H_height*W_width,

D_hid_1=256, # TODO: Use same as layer 1 of encoder of wide AE (Task 4)

D_hid_2=32, # TODO: Use same as layer 1 of encoder of wide AE (Task 4)

D_out=C_classes,

rng=rng)

# Start training

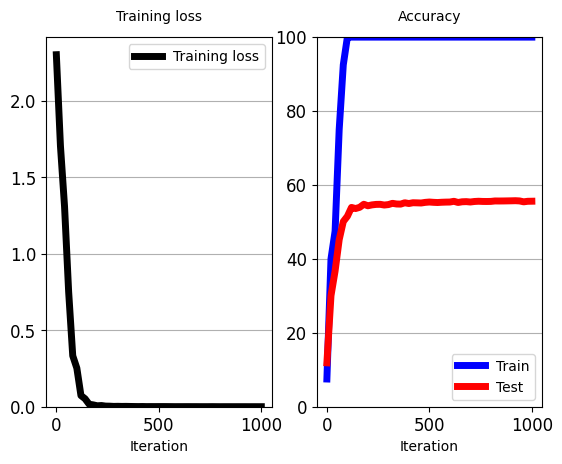

train_classifier(net_classifier_from_scratch,

None, # No pretrained AE

cross_entropy,

rng,

train_imgs_flat[:100],

train_lbls_onehot[:100],

test_imgs_flat,

test_lbls_onehot,

batch_size=40,

learning_rate=3e-3,

total_iters=1000,

iters_per_test=20)

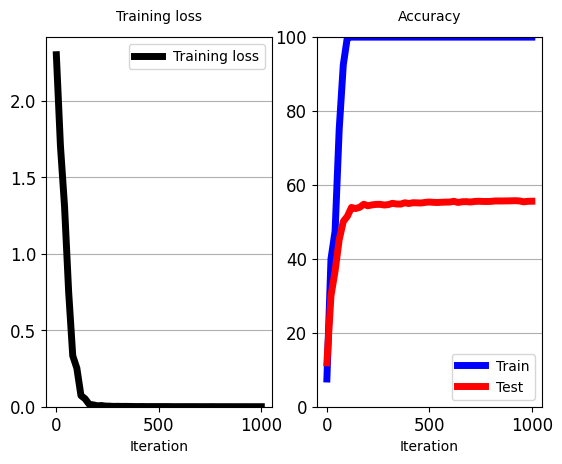

'''

[iter: 16 ]: Training Loss: 1.93 Accuracy: 32.50

[iter: 17 ]: Training Loss: 2.04 Accuracy: 30.00

[iter: 18 ]: Training Loss: 1.91 Accuracy: 27.50

[iter: 19 ]: Training Loss: 1.77 Accuracy: 32.50

[iter: 20 ]: Training Loss: 1.71 Accuracy: 40.00

Testing Accuracy: 30.05

[iter: 21 ]: Training Loss: 1.67 Accuracy: 42.50

[iter: 22 ]: Training Loss: 1.64 Accuracy: 57.50

...

[iter: 997 ]: Training Loss: 0.00 Accuracy: 100.00

[iter: 998 ]: Training Loss: 0.00 Accuracy: 100.00

[iter: 999 ]: Training Loss: 0.00 Accuracy: 100.00

Testing Accuracy: 55.60

'''

5.9 当标签有限时,使用无监督 AE 作为监督分类器的“预训练特征提取器”(Use Unsupervised AE as ‘pre-trained feature-extractor’ for a supervised Classifier when labels are limited)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

# Train classifier on top of pre-trained AE encoder

class Classifier_1layer(Network):

# Classifier with just 1 layer, the classification layer

def __init__(self, D_in, D_out, rng):

# D_in: dimensions of input

# D_out: dimension of output (number of classes)

#### TODO: Fill in the blanks ######################

w_out_init = rng.normal(loc=0.0, scale=0.01, size=(D_in+1, D_out))

w_out = torch.tensor(w_out_init, dtype=torch.float, requires_grad=True)

####################################################

self.params = [w_out]

def forward_pass(self, batch_inp):

# compute predicted y

[w_out] = self.params

# In case input is image, make it a tensor.

batch_inp_t = torch.tensor(batch_inp, dtype=torch.float) if type(batch_inp) is np.ndarray else batch_inp

unary_feature_for_bias = torch.ones(size=(batch_inp_t.shape[0], 1)) # [N, 1] column vector.

batch_inp_ext = torch.cat((batch_inp_t, unary_feature_for_bias), dim=1) # Extra feature=1 for bias. Lec5, slide 4.

# Output classification layer

logits = batch_inp_ext.mm(w_out)

# Output layer activation function

# Softmax activation function. See Lecture 5, slide 18.

exp_logits = torch.exp(logits)

y_pred = exp_logits / torch.sum(exp_logits, dim=1, keepdim=True)

# sum with Keepdim=True returns [N,1] array. It would be [N] if keepdim=False.

# Torch broadcasts [N,1] to [N,D_out] via repetition, to divide elementwise exp_h2 (which is [N,D_out]).

return y_pred

# Create the network

rng = np.random.RandomState(seed=SEED) # Random number generator

# As input, it will be getting z-codes from the AE with 32-neurons bottleneck from Task 4.

classifier_1layer = Classifier_1layer(autoencoder_wide.D_bottleneck, # Input dimension is dimensions of AE's Z

C_classes,

rng=rng)

########### TODO: Fill in the gaps to start training ####################

# Give to the function the 1-layer classifier, as well as the pre-trained AE that will work as feature extractor.

# For the pre-trained AE, give the instance of 'wide' AE that has 32-neurons bottleneck, which you trained in Task 4.

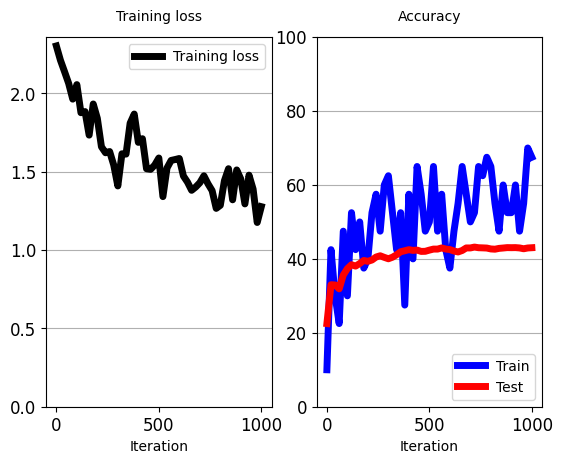

train_classifier(classifier_1layer, # 要训练的单层分类器

autoencoder_wide, # 预训练的AE,将被用作特征提取器

cross_entropy, # 计算损失的函数

rng,

train_imgs_flat[:100],

train_lbls_onehot[:100],

test_imgs_flat,

test_lbls_onehot,

batch_size=40,

learning_rate=3e-3, # 5e-3, is the best for 1-layer classifier and all data.

total_iters=1000,

iters_per_test=20)

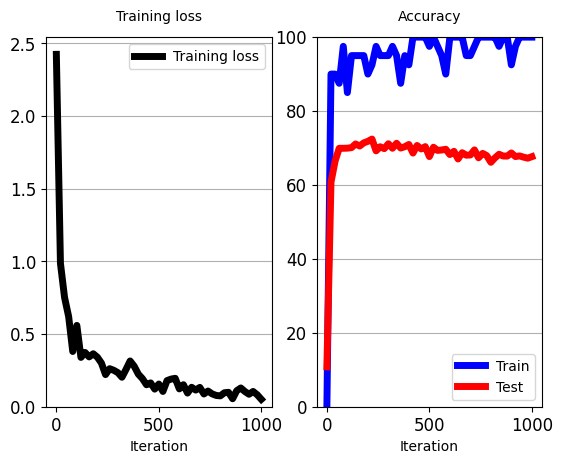

'''

[iter: 0 ]: Training Loss: 2.42 Accuracy: 0.00

Testing Accuracy: 10.79

[iter: 1 ]: Training Loss: 2.25 Accuracy: 12.50

[iter: 2 ]: Training Loss: 2.08 Accuracy: 20.00

[iter: 3 ]: Training Loss: 2.16 Accuracy: 7.50

[iter: 4 ]: Training Loss: 1.99 Accuracy: 35.00

[iter: 5 ]: Training Loss: 1.78 Accuracy: 60.00

[iter: 6 ]: Training Loss: 1.90 Accuracy: 32.50

[iter: 7 ]: Training Loss: 1.79 Accuracy: 47.50

[iter: 8 ]: Training Loss: 1.74 Accuracy: 45.00

[iter: 9 ]: Training Loss: 1.67 Accuracy: 50.00

[iter: 10 ]: Training Loss: 1.57 Accuracy: 62.50

[iter: 11 ]: Training Loss: 1.51 Accuracy: 67.50

[iter: 12 ]: Training Loss: 1.37 Accuracy: 72.50

[iter: 13 ]: Training Loss: 1.43 Accuracy: 77.50

[iter: 14 ]: Training Loss: 1.37 Accuracy: 65.00

[iter: 15 ]: Training Loss: 1.33 Accuracy: 60.00

[iter: 16 ]: Training Loss: 1.44 Accuracy: 65.00

[iter: 17 ]: Training Loss: 1.41 Accuracy: 72.50

[iter: 18 ]: Training Loss: 1.39 Accuracy: 70.00

[iter: 19 ]: Training Loss: 1.17 Accuracy: 72.50

[iter: 20 ]: Training Loss: 0.99 Accuracy: 90.00

Testing Accuracy: 60.76

[iter: 21 ]: Training Loss: 1.09 Accuracy: 87.50

[iter: 22 ]: Training Loss: 1.01 Accuracy: 80.00

...

[iter: 997 ]: Training Loss: 0.04 Accuracy: 100.00

[iter: 998 ]: Training Loss: 0.09 Accuracy: 100.00

[iter: 999 ]: Training Loss: 0.05 Accuracy: 100.00

Testing Accuracy: 67.65

'''

5.10 使用无监督 AE 编码器的参数来初始化监督分类器的权重,然后使用有限的标签进行细化(Use parameters of an Unsupervised AE’s encoder to initialize weights of a supervised Classifier, followed by refinement using limited labels)

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

# Pre-train a classifier.

# The below classifier has THE SAME architecture as the 3-layer Classifier that we trained...

# ... in a purely supervised manner in Task-6.

# This is done by inheriting the class (Classifier_3layers), therefore uses THE SAME forward_pass() function.

# THE ONLY DIFFERENCE is in the construction __init__.

# This 'pretrained' classifier receives as input a pretrained autoencoder (pretrained_AE) from Task 4.

# It then uses the parameters of the AE's encoder to initialize its own parameters, rather than random initialization.

# The model is then trained all together.

class Classifier_3layers_pretrained(Classifier_3layers):

def __init__(self, pretrained_AE, D_in, D_out, rng):

D_in = D_in

D_hid_1 = 256

D_hid_2 = 32

D_out = D_out

w_out_init = rng.normal(loc=0.0, scale=0.01, size=(D_hid_2+1, D_out))

w_1 = torch.tensor(pretrained_AE.params[0], dtype=torch.float, requires_grad=True)

w_2 = torch.tensor(pretrained_AE.params[1], dtype=torch.float, requires_grad=True)

w_out = torch.tensor(w_out_init, dtype=torch.float, requires_grad=True)

self.params = [w_1, w_2, w_out]

# Create the network

rng = np.random.RandomState(seed=SEED) # Random number generator

classifier_3layers_pretrained = Classifier_3layers_pretrained(autoencoder_wide, # The AE pre-trained in Task 4.

train_imgs_flat.shape[1],

C_classes,

rng=rng)

# Start training

# NOTE: Only the 3-layer pretrained classifier is used, and will be trained all together.

# No frozen feature extractor.

train_classifier(classifier_3layers_pretrained, # classifier that will be trained.

None, # No pretrained AE to act as 'frozen' feature extractor.

cross_entropy,

rng,

train_imgs_flat[:100],

train_lbls_onehot[:100],

test_imgs_flat,

test_lbls_onehot,

batch_size=40,

learning_rate=3e-3,

total_iters=1000,

iters_per_test=20)

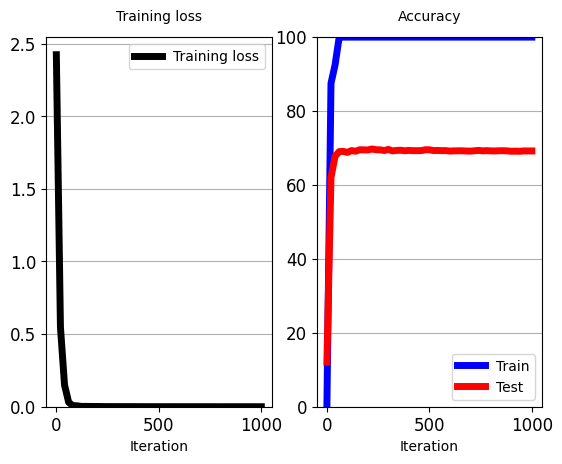

'''

[iter: 0 ]: Training Loss: 2.42 Accuracy: 0.00

Testing Accuracy: 12.06

[iter: 1 ]: Training Loss: 2.19 Accuracy: 12.50

[iter: 2 ]: Training Loss: 2.04 Accuracy: 22.50

[iter: 3 ]: Training Loss: 2.11 Accuracy: 15.00

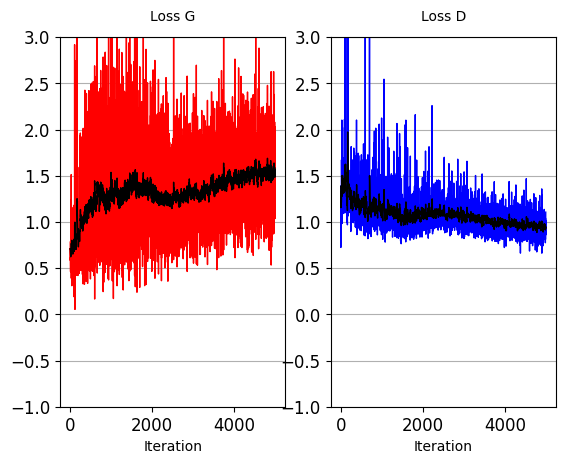

[iter: 4 ]: Training Loss: 1.89 Accuracy: 50.00